Actor and Action Video Segmentation from a Sentence

Abstract

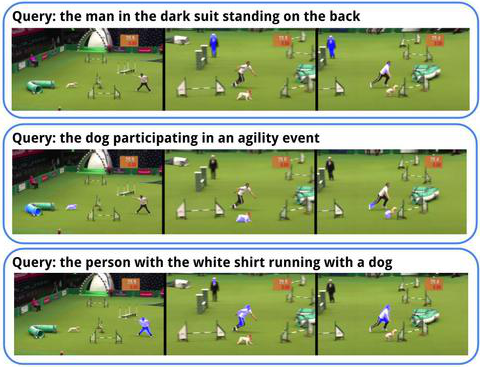

This paper strives for pixel-level segmentation of actors and their actions in video content. Different from existing works, which all learn to segment from a fixed vocabulary of actor and action pairs, we infer the segmentation from a natural language input sentence. This allows to distinguish between fine-grained actors in the same super-category, identify actor and action instances, and segment pairs that are outside of the actor and action vocabulary. We propose a fully-convolutional model for pixel-level actor and action segmentation using an encoder-decoder architecture optimized for video. To show the potential of actor and action video segmentation from a sentence, we extend two popular actor and action datasets with more than 7,500 natural language descriptions. Experiments demonstrate the quality of the sentence-guided segmentations, the generalization ability of our model, and its advantage for traditional actor and action segmentation compared to the state-of-the-art.

Datasets

A2D Sentences

We have extended Actor and Action (A2D) Dataset with additional description of every object is doing in the videos. We provide three files containing our annotation:

a2d_annotation.txt contains annotation in the format “video_id,instance_id,query” where:

“video_id” - the original id of the video from the A2D dataset

“instance_id” - the id of the object in the video that we have added to the original annotation

“query” - the description of what object is doing throughout the whole video (see the paper for more details)

a2d_annotation_with_instances.zip - the original annotation from the A2D dataset in HDF5 with the field “instance” added. This field corresponds to “instance_id” field in the a2d_annotation.txt file.

a2d_missed_videos.txt contains all the videos that were not annotated with descriptions and therefore were excluded from experiments in the paper.

J-HMDB Sentences

We have extended J-HMDB Dataset with additional description of every human is doing in the videos:

jhmdb_annotation.txt contains annotation in the format “video_id,query”:

“video_id” - the original id of the video from the J-HMDB dataset

“query” - the description of what human is doing throughout the whole video (see the paper for more details)